Today's Agenda

Hello fellow humans! Today, we’re all about value. Can AI do economically valuable work yet? What is the value of the hidden AI costs in our energy grid? What is the value of OpenAI’s new Study Mode? And we kick off a series on AI in education: how to understand what current students will find valuable when they enter an AI-native workforce.

It’s all an experiment, so let’s get started!

News

Can AI Really Do Valuable Work?

OpenAI published a new study called GDPval that aims to assess the current economic viability of AI. What sets this study apart is that it specifically targets AI performing real-world, economically valuable work, evaluated by professionals with an average of 14 years of experience in their respective industries. The study spans 44 occupations in the 9 most economically valuable industries. The AI models performed 1,320 tasks, and their deliverables were rated by human professionals and an experimental automated grader.

Here is their list of occupations.

GDPval 2025 Occupations Tested

This is the first objective look at current AI capabilities in a broad range of real-world work tasks. Some of the deliverables included:

Legal brief

Engineering blueprint

Customer support conversation

Nursing care plan

Evaluators developed detailed rubrics for grading each submission and rated each deliverable as “better than,” “as good as,” or “worse than” what they would expect from an industry expert performing the same work.

They tested the work of the leading frontier models (GPT‑4o, o4-mini, OpenAI o3, GPT‑5, Claude Opus 4.1, Gemini 2.5 Pro, and Grok 4) and compared it to the work of human professionals.

These were all one-shot tests: the models received minimal context, and there was no back-and-forth conversation to clarify requirements or add nuance.

The bottom line: the frontier models are approaching the quality of work produced by industry experts.

Takeaways

The time of easy wins and breakneck releases may be coming to an end as improvements become less frequent, more incremental, and less exponential. AI may be reaching the limits of what a single language model can do.

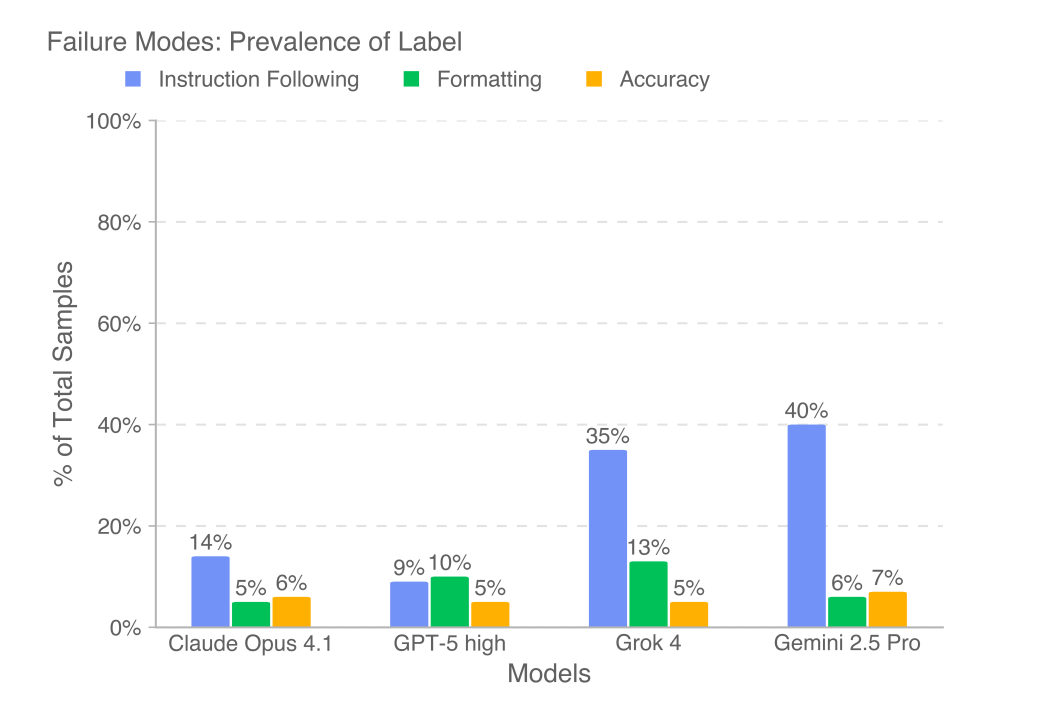

The most common failure mode across all of these models was failing to follow instructions; some even failed to produce a deliverable. As humans get better at working with generative AI, and as models add layers of logic and checks, this will likely become a mostly solved problem in the near future.

This study targets the most economically valuable knowledge work, so in that sense it is primarily an economic study of AI, meant to reassure investors who have poured billions of dollars into AI development that their bets will pay off. If that’s what you’re looking for, you can certainly find it in this report.

Language models are unquestionably faster than humans: work that takes days or weeks of expert effort can be accomplished in minutes using these frontier models. But the work is uneven, unpredictable, and requires human supervision.

The quality of work is approaching industry experts, but is still not quite there.

The Achilles Heel of AI

AI is starting to present a new risk: energy demand. OpenAI recently released its study showing that language models are increasingly able to supplement or even replace knowledge workers, but the cost is a staggering demand for energy to run new AI data centers.

But time is against us. While tech giants are working to build new nuclear energy capacity, those projects are at least 10 years away, with no guarantee that the novel reactor designs will be secure, cost-effective, or even work. President Biden’s Department of Energy committed to tripling nuclear output by 2050, 25 years from now. New natural gas turbine generators face supply chain constraints and lead times of 3-7 years before coming online. Solar farms take about 2-3 years to come online, and wind farms can take anywhere from a few years to over a decade. The bottom line is that there is no fast track to new energy capacity.

Data centers tend to be concentrated in a few areas, like California, Texas, and Virginia. But power grids are regional, so residents across entire regions that share a grid with these data centers are feeling the impact. A Bloomberg News analysis found that, for residents near data centers, electricity costs have risen as much as 267% from what they were five years ago.

Bloomberg analyzed the PJM Interconnection, the grid that spans from Illinois to Washington, D.C., and estimates that customers across the region have paid an additional $9.3 billion due to the rapid development of AI data centers.

There may be some capacity solutions in the pipeline, but they’re all still years away at best. Whether this massive AI bet will pay off in the end remains to be seen.

ChatGPT Study Mode

OpenAI recognized some of the issues that AI presents for students and launched Study Mode in late July. Study Mode is a more interactive version of ChatGPT intended to help students “work through a problem step-by-step, instead of just getting an answer.”

So how well does it actually tutor students and support effective learning? Learning coach Justin Sung gives us some fascinating insights. Here are some highlights from his findings:

Great for advanced learners who are capable of understanding their knowledge gaps and why they think the way they do

More conversational than standard ChatGPT and great for back-and-forth interaction

Psychological safety — you can ask any question without fear of embarrassment

It is not capable of tailoring interactions to the user’s cognitive or education level

Users have to drive the conversation, so it requires a certain level of initiative

I strongly recommend watching the video linked above to fully grasp his perspective and findings. I especially appreciated his explanation of why students need a mental model of a subject, and of how much effort Study Mode demands from students before it can help them shape that mental model. He says:

Most of the benefit you get from learning a topic, and the way the topic becomes easier for you is not in understanding more facts and concepts, it’s about getting a way to think about that topic.

It takes a bit of time and effort to create that mental model. A great teacher is able to facilitate that process.

Sung says that Study Mode can do this, but the student has to steer it toward that behavior; it doesn’t arise naturally in response to the student’s confusion. From his testing, he concludes:

The type of learner I was when using ChatGPT made the biggest difference than anything … using ChatGPT as an active, engaged, metacognitive, reflective, higher-order learner without study mode is vastly more effective than using it with study mode while being a passive, disengaged learner.

Check out the video for his tips on getting the most out of Study Mode. But the bottom line is that unless you are already an active, engaged, higher-order learner, Study Mode will not be very helpful, and may even be frustratingly slow.

Education: The Challenge We Face

Terminology

Artificial Intelligence (AI) is a broad term encompassing tools like Machine Learning (ML), Large Language Models (LLMs), and systems like AlphaGo or IBM’s Deep Blue. While Artificial General Intelligence (AGI) remains speculative, the most immediate impact on education comes from generative AI systems like ChatGPT, Claude, Gemini, and DeepSeek. These tools are increasingly used by students and require educators to adapt.

Throughout this series, I’ll use “LLM” and “generative AI” interchangeably, though generative AI includes more than just language models. Language models will be the focus of this series because students use them so heavily.

A new school year has begun, and it is harder than ever for teachers to know whether assignments are original student work or outsourced to AI. Educators have relied on some common tip-offs to identify genuine student work: typos, personal details woven in, occasional awkward phrasing, distinct slang, and a recognizably human writing style. They have also learned to look for AI slop red flags like frequent em dashes and over-the-top expressions like “ever-evolving,” “game-changing,” or “you can’t help but feel…” Because these are common clichés, language models are more likely to use them.

But AI tools are popping up everywhere and for every purpose, and those writing-style tip-offs are no guarantee. Some AI tools mimic a student’s personal writing style, inject typos, or rewrite content so that it seems more “human.”

This is not just some moral panic. Research from the MIT Media Lab shows that students who rely on generative AI for writing assignments exhibit lower brain activity in the neural regions tied to creativity, memory, and semantic processing when compared to students who perform “brain-only” work. Essays written by students who relied on AI often looked similar, and those students retained less memory and recognition of what they “wrote.”

This cognitive atrophy isn't limited to students; even highly educated professionals are vulnerable. One study found that doctors became less able to identify precancerous polyps after growing overly reliant on AI diagnostic tools. The unused axe gets dull: when we stop exercising our cognitive skills, they weaken.

The Future of Work

And yet conventional wisdom tells us that AI is the future of work and that the current generation of students will need AI skills in many professional fields. A study from the Oxford Internet Institute shows that AI is actually increasing demand for certain human skills, including critical thinking, complex problem solving, effective communication, and creative decision making, and that people with those skills are offered 5-10% higher salaries. In the private sector, business analysts likewise predict that AI in the workplace will demand exactly these higher-order thinking skills, the very skills that fade when we become overly reliant on AI. Given a choice between doing the hard work to learn these skills or simply outsourcing that work to AI, it’s difficult to convince students to choose the harder path.

Next in this series, we’ll go into more depth on collaboratively developing a code of ethics with students in a way that builds buy-in from the beginning, not just on the ethical standards but on the cognitive and skill objectives. The key is building relationships of trust, transparency, and candor at a time when it feels like everything can be faked.

Radical Candor

We are at the dawn of this radical transformation of humans that by its very nature is a truly complex and emergent innovation. Nobody on earth can predict what’s gonna happen. We’re on the event horizon of something… This is an uncontrolled experiment in which all of humanity is downstream.